Imagine a world where your favorite websites aren’t just pretty, but truly smart. A world where online tools understand you better, respond instantly, and keep your personal information private. This doesn’t have to be science fiction; it’s the promise of a new standard in web development: the Web Neural Network API, or WebNN.

For too long, the incredible power of Artificial Intelligence (AI) and Machine Learning (ML) has largely lived on distant, powerful servers in the cloud. If a website wanted to use AI to, say, recognize a cat in your photo or translate a sentence, it had to send your data all the way to a server, wait for the processing, and then get the result back. This “round trip” could be slow, expensive for the website, and raise privacy concerns since your data was leaving your device. For a single request, that round trip is probably immaterial to the user. However, with the proliferation of AI agents in 2025, the number of these back-and-forth calls will add up to substantial bandwidth and resource consumption. This could raise the cost of operating AI applications and start to raise scalability questions.

Enter WebNN: The new brain for your browser

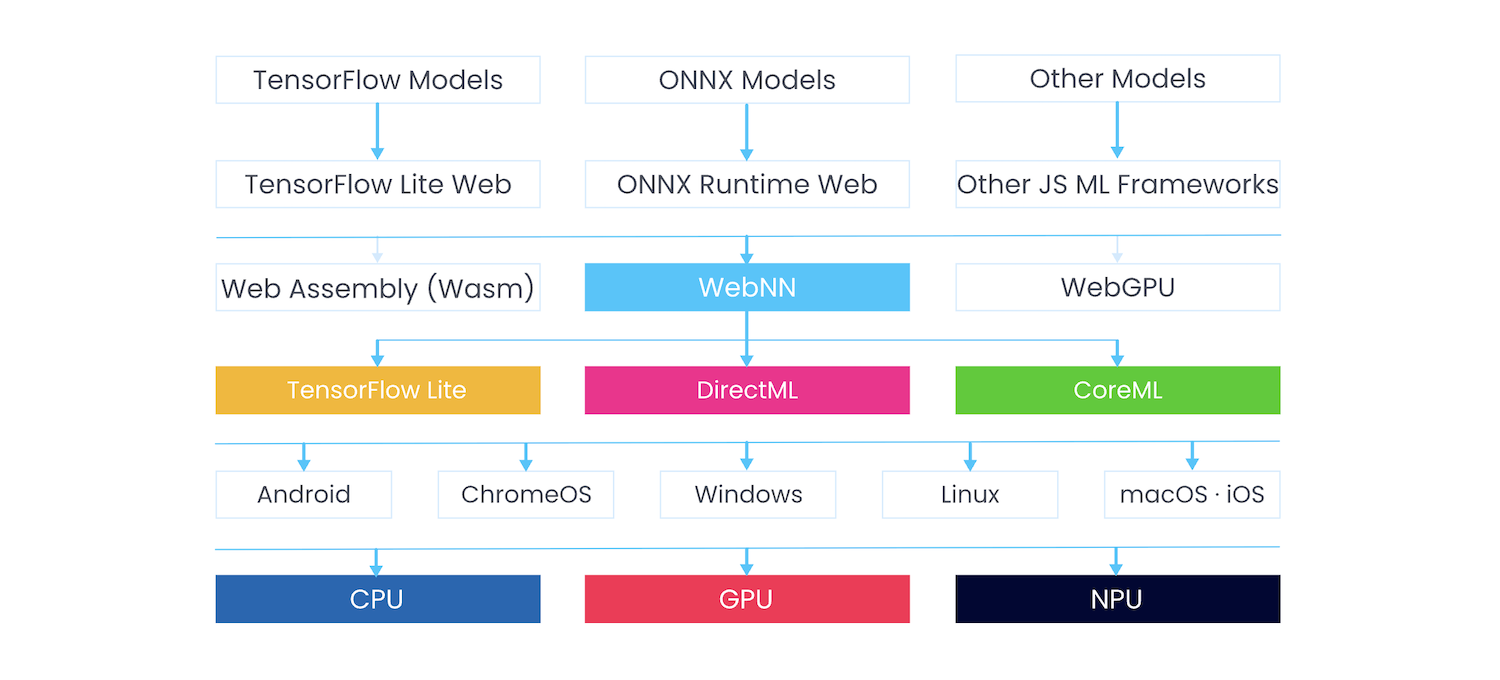

The WebNN API is like giving your web browser a built-in AI processor. It’s a technical bridge that allows web developers to tap into your device’s own computing power to run machine learning models directly, whether that is your laptop’s CPU, its graphics card (GPU), or the dedicated AI chips (NPUs) found in newer smartphones and computers. Apple, for example, has invested heavily in the Neural Engine built into its M-series chips.

Consider the benefits:

- Blazing Fast Performance: No more waiting for data to travel to and from a server. AI tasks run right on your device, without network latency. This means smoother, more responsive web applications.

- Privacy First: With WebNN, your sensitive data (like your photos, videos, or even voice) stays on your device. It’s processed locally, drastically reducing privacy risks and keeping your information safe.

- Works Anywhere, Anytime: Once the AI model is loaded, WebNN allows applications to function even without an internet connection. Imagine using a smart filter on your photos during a flight, or getting real-time assistance in a remote area with no Wi-Fi.

- Reduced Costs for Developers: By offloading processing to user devices, websites can significantly cut down on their server costs, making advanced AI features more accessible to everyone.

A Glimpse Under the Hood: The Magic of AI Inference on Your Device

For those curious about how this all works, WebNN provides a JavaScript interface via navigator.ml. The real power comes from loading pre-trained AI models that have already learned to do complex tasks. WebNN then lets your browser run these models right on your device, using whatever hardware acceleration is available.
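As a rough illustration of that entry point, the sketch below feature-detects navigator.ml and asks it for an execution context. The helper name createWebNNContext is hypothetical, and the function takes a navigator-like object as a parameter so it can also run outside a browser. It assumes that navigator.ml exposes an asynchronous createContext() method, as the specification draft describes; the options a given browser accepts may differ.

```javascript
// Sketch: feature-detect WebNN and request an execution context.
// `nav` is any navigator-like object (pass `navigator` in a browser).
// `options` is forwarded untouched to createContext(); which option names
// a browser accepts is an assumption to verify against the current spec.
async function createWebNNContext(nav, options = {}) {
  // navigator.ml is the entry point; bail out if it is missing.
  if (!nav || !nav.ml || typeof nav.ml.createContext !== 'function') {
    return null; // WebNN is not exposed in this environment.
  }
  try {
    return await nav.ml.createContext(options);
  } catch (error) {
    // WebNN is exposed, but no context could be created for these options.
    console.warn('WebNN context creation failed:', error);
    return null;
  }
}
```

In a page, this might be called as createWebNNContext(navigator, { powerPreference: 'low-power' }), where powerPreference is a hint from the specification draft. A null result is the cue to fall back to a server-side or pure JavaScript path.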

To help visualize this process, here’s a simple sequence diagram (figure 1) showing the key steps when a web application uses WebNN for an AI task:

Figure 1: High level flow of interactions using WebNN API

The previous sequence diagram (figure 1) illustrates how your interaction triggers the web application, which then uses the browser’s WebNN capabilities to engage your device’s hardware. The heavy lifting of the AI model’s calculations happens directly on your device, with the results quickly returned to the web application.

Simple example

To illustrate this further, let’s consider a very simple AI “brain” that can classify a number as “small” or “large.” While a human wouldn’t need AI for this, it perfectly showcases how a pre-trained model takes an input and provides an AI-driven classification.

First, your web application would need to load this tiny AI brain. In a real-world scenario, this ‘brain’ (what we’ll call preTrainedModelGraph in the pseudocode below) would be a sophisticated AI model for tasks like image recognition or language processing. It’s typically loaded from a dedicated model file (like an ONNX file), usually with the help of a JavaScript ML framework, and then prepared through WebNN for optimal performance on your device’s specific hardware. For our super simple example, we’ll imagine it’s ready to go:

```javascript
// Stand-in for a real model: it mimics a compiled graph in plain JavaScript,
// so the example needs no model file and makes no WebNN calls itself.
async function loadMyTinyClassificationModel() {
  return {
    compute: async (inputs) => {
      const inputVal = inputs['input_feature'].data[0];
      const probSmall = inputVal <= 50 ? 0.95 : 0.05; // AI's confidence if 'small'
      const probLarge = inputVal <= 50 ? 0.05 : 0.95; // AI's confidence if 'large'
      return {
        'output_prediction': { data: new Float32Array([probSmall, probLarge]) }
      };
    }
  };
}
```
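For comparison, here is a hedged sketch of how a similar "is this number large?" model could be described as an actual WebNN graph rather than hand-written JavaScript. The function name defineTinyClassifier and the output name prob_large are hypothetical. The builder argument is assumed to behave like an MLGraphBuilder, with input, constant, sub, sigmoid, and build methods as the specification draft describes. The descriptor field shape was called dimensions in older drafts, so treat the exact spelling as an assumption.

```javascript
// Sketch only: describes a one-input classifier as a WebNN-style graph.
// `builder` is expected to behave like an MLGraphBuilder
// (in a supporting browser: new MLGraphBuilder(context)).
function defineTinyClassifier(builder, threshold = 50) {
  // One float32 value in, one float32 value out.
  const descriptor = { dataType: 'float32', shape: [1] };
  const input = builder.input('input_feature', descriptor);
  const cutoff = builder.constant(descriptor, new Float32Array([threshold]));
  // sigmoid(x - threshold) is below 0.5 for inputs under the threshold
  // and above 0.5 for inputs over it.
  const probLarge = builder.sigmoid(builder.sub(input, cutoff));
  // build() takes a map of output names to operands and resolves to a graph.
  return builder.build({ prob_large: probLarge });
}
```

Unlike the stand-in above, a graph built this way is compiled by the browser for the chosen hardware, and execution is then requested through the context rather than through a method on the graph.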

Once our tiny AI classification model is loaded (via the loadMyTinyClassificationModel function above), using it is straightforward. You feed it the input data (the number you want to classify) and, with a real model, WebNN would handle the heavy lifting by running the model’s calculations locally on your device’s hardware. The result is a classification produced without your data ever leaving your browser:

```javascript
async function classifyNumberWithWebNN(inputNumber) {
  // Check if WebNN is available in the browser.
  if (!navigator.ml) {
    console.warn("WebNN API not supported.");
    return "Browser AI features unavailable.";
  }

  try {
    const preTrainedModelGraph = await loadMyTinyClassificationModel();

    // Prepare the input data for the AI model.
    const inputs = {
      'input_feature': { data: new Float32Array([inputNumber]) }
    };

    // Run the AI model on your device. With a real model, WebNN would carry
    // out this step; here the stand-in's compute() plays that role.
    const outputs = await preTrainedModelGraph.compute(inputs);

    // Interpret the AI's output.
    const resultProbs = outputs['output_prediction'].data;
    const isSmall = resultProbs[0] > resultProbs[1]; // Decide based on probabilities.
    const label = isSmall ? 'small' : 'large';
    return `The number ${inputNumber} is classified as '${label}'.`;
  } catch (error) {
    console.error("WebNN classification failed:", error);
    return "Error with AI classification.";
  }
}
```

This snippet demonstrates the core flow: the application prepares input data and then calls compute on the pre-loaded AI graph. In the actual API, execution is requested through a WebNN context rather than a method on the graph itself, but the shape of the flow is the same. The magic happens behind the scenes, leveraging your device’s hardware for speed and local processing. While our example is simple, imagine this compute call processing live video for background blur during a conference call, text for smart summarization, or even live language translation while you watch videos or sit in meetings.
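The last step of that flow, turning output probabilities into a decision, generalizes beyond two classes. A minimal sketch follows; pickLabel and the labels array are hypothetical names chosen for illustration, and the helper is plain JavaScript rather than part of WebNN.

```javascript
// Sketch: pick the most probable label from a model's output vector.
// `labels` is a hypothetical, application-supplied list in the same
// order as the model's output probabilities.
function pickLabel(probabilities, labels) {
  if (probabilities.length === 0 || probabilities.length !== labels.length) {
    throw new RangeError('probabilities and labels must be non-empty and equal in length');
  }
  let best = 0;
  for (let i = 1; i < probabilities.length; i++) {
    if (probabilities[i] > probabilities[best]) best = i;
  }
  return { label: labels[best], confidence: probabilities[best] };
}

const result = pickLabel(new Float32Array([0.95, 0.05]), ['small', 'large']);
console.log(result.label); // prints "small"
```

The same helper would work unchanged for an image classifier with hundreds of labels, since it only depends on the order of the output values.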

The Journey Ahead

The Web Neural Network API is currently a W3C Candidate Recommendation Draft, meaning it’s well into its standardization process. Browser vendors and developers are actively collaborating to refine it, ensuring it’s robust, performant, and widely compatible. The goal is to make it a fundamental part of the web platform, empowering a new generation of intelligent, private, and lightning-fast web applications. Be sure to follow along by monitoring the Web Machine Learning Working Group and engaging with the Web Machine Learning Community Group (WebML CG).

Get ready for a smarter web, where the brains are in your browser!